[A colleague recently posed the following rhetorical scenario about how to deal with a situation that presumably requires upfront estimates, to which I responded internally. I’m republishing it here, as I think it’s a common question, especially for service providers who are perhaps skeptical and/or misinformed about NoEstimates techniques and philosophy.]

A market opportunity demands that I have a gismo built by a certain date. If delivered after that date, the gismo development has absolutely no value because my competitor will have cornered the gismo market. If I miss the date, gismo development becomes a sunk cost. I’m looking to hire your top-notch development team. Before I do, I need to know the risk associated with developing gismos by the compliance due date to determine whether I want to invest in gismo development or place my money in other opportunities. I need to understand the timeline risk involved in hitting that date. Your team has been asked to bid for this work. It is a very, very lucrative contract if you can deliver the gismos no later than the due date. Will you bid? How will you convince me that I should choose your team for the contract? How will you reduce my risk of sunk cost on gismos?

Let me take each of these questions in turn, since each is important and related but independent of the others.

Will you bid?

This depends on factors like potential upside and downside. Given the uncertainty, I would, in the spirit of “Customer collaboration over contract negotiation,” probably try to collaborate with you to create a shared-risk contract in which we each had roughly equal upside and shared the risk. Jacopo Romei has written and spoken on this topic far more comprehensively, eloquently and entertainingly than I can here.

How will you convince me that I should choose your team for the contract?

This is (or should be) relatively orthogonal to the question of estimating. Still, there is a subtext here: that the appearance of certainty somehow credentializes someone. That appearance is commonly conflated with the ability to deliver. In a complex, uncertain world, you probably shouldn’t trust someone who makes promises that are impossible to keep.

I would “convince” you by creating a trustful relationship, based on how fit for purpose my team was for your needs. Though I wouldn’t call it “convincing” so much as us agreeing that we share similar values and a similar approach to work. Deciding to partner with my firm is a bit like agreeing to marry someone: We conclude that we have enough shared goals and values, regardless of the specifics, and trust each other to work for our mutual benefit. For instance, two people may agree that they’d both like to have children someday; if, unfortunately, they can’t, that needn’t invalidate the marriage.

How will you reduce my risk of sunk cost on gismos?

This is probably the question most closely related to understanding when we’ll be done. In addition to the shared-risk model (see above), we have a few techniques we could employ:

- Front-load value: Is it possible to obviate an “all or nothing” approach by using an incremental-iterative one? Technical practices like continuous-delivery pipelining help mitigate the all-or-nothing risk. Will delivery of some gismos be better than none?

- Reference-class forecasting: Do we have any similar work that we’ve done with data that we could use to do a probabilistic forecast?

- Two-stage commitment (Build a bit then forecast): Is it possible to “buy down” some uncertainty by delivering for a short period (which you would pay for) in which we could generate enough data to determine whether you/we wanted to continue?

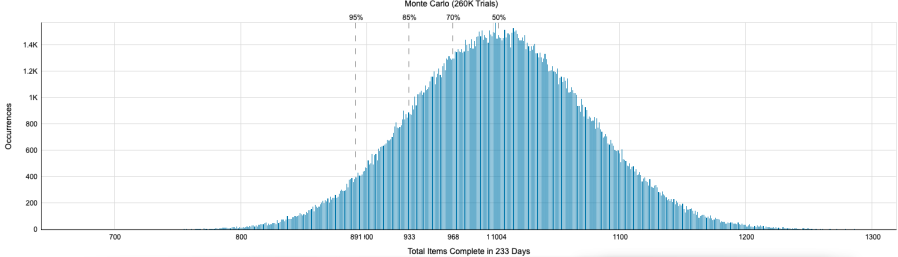

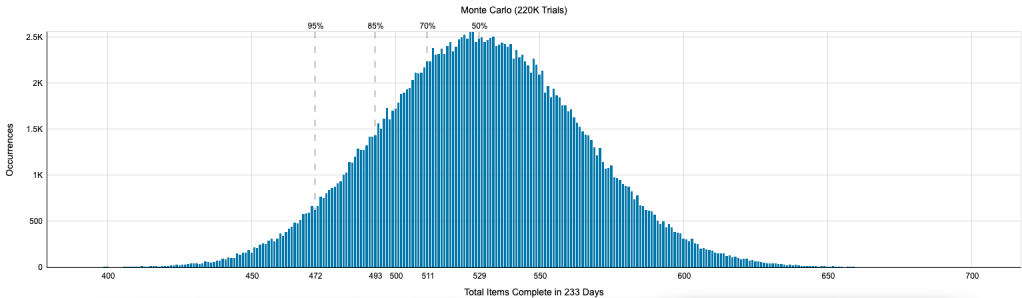

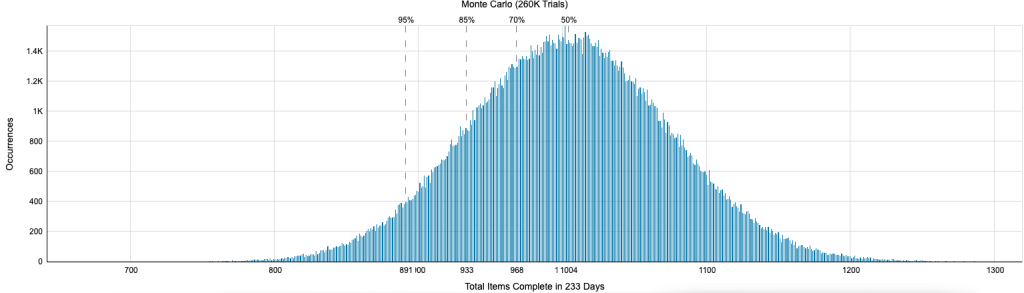

Reference-class forecasting: Reference-class forecasting is “a method of predicting the future by looking at similar past situations and their outcomes.” So do we have any similar work that we’ve done with data that we could use to do a probabilistic forecast? If we do, I would run multiple different forecasts to get a range of possible outcomes. My colleague’s scenario is about building a software gismo, so if we’ve built other things like this gismo, we would use that past data. Maybe we’ve done three similar kinds of projects. The scenario is a fixed-date problem: Could we deliver by a date (let’s say it’s Christmas)? Here are some forecasts that we would run with data from previous work:

[Forecast charts for Project A, Project B and Project C]

Now we can pick our level of confidence (that is, how much risk we’re comfy with) and get a sense of what’s achievable. Say we’re very conservative, so let’s use the 95th percentile:

- Project A: at least 584 work items

- Project B: at least 472

- Project C: at least 891

So, based on three projects we actually delivered, the very conservative forecasts range from at least 472 to at least 891 work items between now and Christmas. Furthermore, we might also know how many work items (300? 2000?) each of these projects required to reach an MVP. That information would then help us decide whether the risk was worth it.
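One common way to run a probabilistic “how many items by this date?” forecast is a Monte Carlo simulation over historical throughput. The sketch below is a minimal illustration, assuming the past-project data is a list of daily throughput counts (items finished per day); the function names, the sample data and the 60-day horizon are hypothetical, and this isn’t necessarily how the Project A, B and C forecasts were produced.

```python
import random


def forecast_how_many(daily_throughput, days, trials=10_000, seed=None):
    """Monte Carlo 'how many' forecast.

    Each trial sums `days` daily throughput values drawn at random (with
    replacement) from the historical samples. Returns the trial totals,
    sorted ascending.
    """
    if not daily_throughput:
        raise ValueError("need at least one historical throughput sample")
    rng = random.Random(seed)
    return sorted(
        sum(rng.choice(daily_throughput) for _ in range(days))
        for _ in range(trials)
    )


def at_least(outcomes, confidence):
    """Item count reached or exceeded in roughly `confidence` of the trials.

    `outcomes` must be sorted ascending, so 95% confidence reads the value
    at the 5th percentile (the low, conservative end).
    """
    index = round((1 - confidence) * len(outcomes))
    return outcomes[min(index, len(outcomes) - 1)]


if __name__ == "__main__":
    # Hypothetical daily throughput (items finished per day) from one past project.
    project_a = [0, 2, 3, 1, 4, 2, 0, 5, 3, 2]
    # Hypothetical number of working days between now and the target date.
    outcomes = forecast_how_many(project_a, days=60, seed=42)
    for confidence in (0.50, 0.85, 0.95):
        print(f"{confidence:.0%} confident: at least {at_least(outcomes, confidence)} items")
```

Running the same simulation once per past project would yield one “at least N items” figure per project, which is how a range like the one above comes about; choosing 0.95 corresponds to the very conservative reading.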

Two-stage commitment (Build a bit then forecast): Is it possible to “buy down” some uncertainty by delivering for a short period (which you would pay for) in which we could generate enough data to determine whether you/we wanted to continue? So maybe we’ve never done anything like this in the past (or, as is more likely the case, we have and were simply too daft to track the data!). The two-stage commitment is a risk-reduction strategy to “buy some certainty” before making a second commitment. Note that this approach protects both the supplier and the buyer.

In this case, we would agree to work for a short period of time (perhaps a month) in order to build just enough to learn how long the full thing would take. For the sake of easy math, let’s say a team costs $1m per month. Would our customer be willing to pay $1m to find out whether he should pay $8m total? Never mind the rule of thumb that says if you’re not confident that you will make 10X your investment, you shouldn’t be building software. Most smart people would want to hedge their risk with such an arrangement. This approach lets us run the forecast as soon as we’ve delivered 10 things; here’s an example of the forecast:

Same idea, but we are now basing this on data from the actual system that we’re building, which is even better than the reference-class approach. Note also that by continually reforecasting with fresh data, we could decide to pull the plug and limit our losses at any time, whether that is a month in, two months in, or later.
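In the two-stage case, the question flips from “how many?” to “when?”. The sketch below is a minimal Monte Carlo illustration, assuming the first month’s 10 delivered items broke down into weekly throughput of 2, 3, 1 and 4, and assuming a hypothetical remaining backlog of 60 items with 30 weeks left; all of those numbers are made up. `should_continue` is a hypothetical go/no-go rule, not a standard one. Rerunning it as each new week’s throughput is appended to the samples is one way to do the continual reforecasting described above.

```python
import math
import random


def forecast_weeks_to_finish(weekly_throughput, remaining_items, trials=10_000, seed=None):
    """Monte Carlo 'when' forecast.

    Each trial draws weekly throughput values at random (with replacement)
    from the observed samples until `remaining_items` are done, and records
    how many weeks that took. Returns the week counts, sorted ascending.
    """
    if not weekly_throughput or max(weekly_throughput) <= 0:
        raise ValueError("need at least one week with completed items")
    if remaining_items < 0 or min(weekly_throughput) < 0:
        raise ValueError("counts must be non-negative")
    rng = random.Random(seed)
    results = []
    for _ in range(trials):
        done = weeks = 0
        while done < remaining_items:
            done += rng.choice(weekly_throughput)
            weeks += 1
        results.append(weeks)
    return sorted(results)


def percentile(sorted_values, p):
    """Nearest-rank percentile of an ascending list, for 0 < p <= 100."""
    rank = math.ceil(p / 100 * len(sorted_values))
    return sorted_values[max(rank, 1) - 1]


def should_continue(weekly_throughput, remaining_items, weeks_left, confidence=95, seed=None):
    """Hypothetical go/no-go rule: continue only if the forecast at the chosen
    percentile finishes within the weeks left before the deadline."""
    weeks = forecast_weeks_to_finish(weekly_throughput, remaining_items, seed=seed)
    return percentile(weeks, confidence) <= weeks_left


if __name__ == "__main__":
    # Hypothetical first month: 10 items delivered as 2, 3, 1 and 4 per week.
    first_month = [2, 3, 1, 4]
    # Hypothetical remaining backlog and weeks left before the deadline.
    remaining, weeks_left = 60, 30
    weeks = forecast_weeks_to_finish(first_month, remaining, seed=1)
    print("50th percentile:", percentile(weeks, 50), "weeks")
    print("95th percentile:", percentile(weeks, 95), "weeks")
    print("Continue?", should_continue(first_month, remaining, weeks_left, seed=1))
```

Note that the conservative end flips relative to a “how many” forecast: for a “when” forecast it is the high percentile, because 95% of simulated trials finish within that many weeks.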

Better questions to ask

People propose rhetorical scenarios like this one within a certain frame. Getting outside of that frame is one of the first challenges of this new way of thinking. It’s not that NoEstimates proponents don’t care about when something will be done (rather, assuming that the information is helpful, it’s just the opposite: we use techniques that rightly portray non-deterministic futures) or are flippant about cost. (For those times when I have come across that way, I humbly apologize.) Rather, we want to offer a different thinking model, one that asks different questions to reframe the problem and lead us to a more helpful way of approaching complex work, like:

- In what context would estimates bring value, and what are we willing to do about it when they don’t? (Woody Zuill)

- How much time do we want to invest in this? (Matt Wynne)

- What can you do to maximize value and reduce risk in planning and delivery? (Vasco Duarte)

- Can we build a minimal set of functionality and then learn what else we must build?

- Would we/you decide not to invest in this work based on an estimate? If so, at what order-of-magnitude estimate would we/you take that action?

- What actions would be different based on an estimate?

And when we do need answers to the “When will it be done?” question, we prefer practices that give us a better understanding of the range of possibilities (e.g., probabilistic forecasting) over practices, reflexively taken as an article of faith, that may not yield the benefits we’ve been told about. That’s why I always encourage teams to find out for themselves whether their current estimating practices work. Most of the time (and I publish the data openly), we find that upfront estimates have very low correlation with actual delivery times, meaning that, as a predictive technique, they’re unreliable (Mattias Skarin found a similar lack of correlation in research for his book Real-World Kanban). It’s not that NoEstimates people are opposed to estimating; it’s that we favor practices that empirically work.
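For teams that want to run this check themselves, a minimal sketch is to pair each finished item’s upfront estimate with its actual delivery time and compute a correlation coefficient. The example below uses Pearson’s r on made-up numbers (swap in your own tracked data); a value near 0 means little linear relationship between estimate and outcome. This is one reasonable choice, not necessarily the analysis behind the published data; for ordinal scales such as story points, a rank correlation like Spearman’s may fit better.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items each")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations (covariance numerator) and of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Made-up example data: one pair per finished work item.
    upfront_estimates = [1, 2, 3, 5, 8, 3, 2, 5, 1, 8]  # e.g. story points
    actual_days = [4, 9, 2, 6, 5, 12, 3, 4, 7, 8]       # e.g. cycle time in days
    r = pearson_r(upfront_estimates, actual_days)
    # r^2 is the share of variance in actual time linearly explained by the estimate.
    print(f"r = {r:.2f}, r^2 = {r * r:.2f}")
```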